The question of how tests should ideally be performed leads to a multifaceted set of concepts. Today, we want to look at one particular aspect: the selection of scenes that are ultimately meant to represent the performance of a particular game.

An ice-cold metaphor

Imagine that you want to take a trip to a country with a harsh Nordic climate. The trip covers a considerable distance and includes not only flat areas and lakes but also mountains, and this time you want to get really close to the summit. Of course, you have to plan the right clothing for it. In the valleys and flat areas it is relatively warm, between 10 and 20 °C, but on the mountain it can get really cold: -10 °C or even lower. On average, you can expect about 15 °C. If you planned around this average temperature, a few sweaters would seem to be enough. Instead, you have to plan so that your clothes protect you on the coldest day on the mountain, or you risk freezing to death. This is the worst-case principle.

The right scene

First, let's apply the metaphor to the selection of a benchmark scene. Frame rates in games vary naturally: there are demanding and less demanding scenes, and they can be distributed arbitrarily throughout a game. One thing up front: "worst case" does not mean, for example, a loading sequence that runs at 10 frames per second and shows massive stuttering. It is about real gameplay.

If you plan the hardware for a certain game, you have to prepare for the most demanding scenes, which correspond to the coldest temperature on the mountain in the metaphor. An average of 60 FPS is of little use if there are levels or areas where the frame rate drops below 30 FPS.
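To make the point about averages concrete, the following minimal Python sketch computes an average frame rate and a percentile-based "P1" frame rate from a list of frametimes in milliseconds. The capture is synthetic (900 frames at 14 ms plus 100 frames at 40 ms), and the function names and the nearest-rank percentile definition are assumptions for illustration; they are not necessarily how CapFrameX calculates its metrics.

```python
import math


def average_fps(frametimes_ms):
    """Average FPS over a capture: number of frames divided by elapsed time."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def percentile_fps(frametimes_ms, p):
    """FPS at the p-th percentile frametime (nearest-rank method).

    p=99 yields a 'P1'-style value: 99% of frames render at least this fast.
    """
    ordered = sorted(frametimes_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return 1000.0 / ordered[rank - 1]


# Synthetic capture (hypothetical, not measured data)
light = [14.0] * 900  # less demanding section, about 71 FPS
heavy = [40.0] * 100  # demanding section, 25 FPS
capture = light + heavy

print(f"average: {average_fps(capture):.1f} FPS")  # average: 60.2 FPS
print(f"P1: {percentile_fps(capture, 99):.1f} FPS")  # P1: 25.0 FPS
```

The average lands at about 60 FPS even though the demanding section runs at only 25 FPS, which is the kind of gap the worst-case approach is meant to expose.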

How representative is the worst case?

To answer this question with complete accuracy, you would have to capture the entire game, covering every variant and case. Beyond that, different settings are undoubtedly part of the test scenarios as well. From a practical point of view, this is an unsolvable problem. The way forward is therefore to estimate a lower bound on performance by combining a demanding scene with demanding settings. Because less demanding parts of the game should run at least as fast, the worst-case method achieves the supposedly impossible: a representative statement about performance.

A few practical stumbling blocks

Finding such a scene is not always easy, since large parts of the game ultimately have to be explored. Mastering this challenge also requires a certain amount of experience and the right tools.

You have to maintain at least a minimum of realism. The loading sequence mentioned at the beginning should not be used as a worst-case scenario, and likewise, scenes or actions that obviously occur only very rarely should be excluded. Excessively high settings, which lead to an unplayable frame rate even on the strongest hardware, should also be avoided. It is very useful to distinguish between hardware tests and game tests: maximum stress makes sense for the former, whereas game tests should focus on realistic conditions that allow a smooth gaming experience.
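The selection criteria described above can be sketched as a filter followed by a minimum: discard anything that is not real gameplay or that occurs only rarely, then take the most demanding remaining scene. This is a minimal Python illustration under stated assumptions; the `Candidate` fields, the 5% playtime-share threshold, and all scene names and numbers are hypothetical and not part of any existing tool.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str              # hypothetical scene label
    avg_fps: float         # average FPS measured for this scene
    is_gameplay: bool      # False for loading sequences, menus and similar
    playtime_share: float  # assumed fraction of playtime spent in comparable scenes


def pick_worst_case(candidates, min_share=0.05):
    """Return the most demanding candidate that is real, not-too-rare gameplay."""
    realistic = [
        c for c in candidates
        if c.is_gameplay and c.playtime_share >= min_share
    ]
    if not realistic:
        raise ValueError("no realistic gameplay scene among the candidates")
    return min(realistic, key=lambda c: c.avg_fps)


# Hypothetical measurements, for illustration only
candidates = [
    Candidate("loading sequence", 10.0, False, 0.02),
    Candidate("rare scripted explosion", 22.0, True, 0.01),
    Candidate("dense town", 48.0, True, 0.25),
    Candidate("open field", 75.0, True, 0.40),
]

print(pick_worst_case(candidates).name)  # dense town
```

Both entries with lower frame rates are filtered out, so "dense town" is selected. Where to set the threshold is a judgment call, in line with the point that a minimum of realism has to be maintained.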

The question of all questions

Custom scene or built-in benchmark? I would like to dedicate a separate article to this topic, so here are just a few words about it, along with a simple example using Ghost Recon Breakpoint.

The advantages of built-in benchmarks are obvious: high repeatability (with some exceptions), low effort, and the possibility of full automation. If only there weren't one big problem: compared to demanding gameplay scenes, built-in benchmarks are sometimes very unrealistic in terms of the frame rates they report. Moreover, manufacturers can optimize specifically for these scenes. We casually call this the "VW effect", in reference to the Volkswagen emissions scandal, in which vehicles behaved differently on the test bench than on the road.

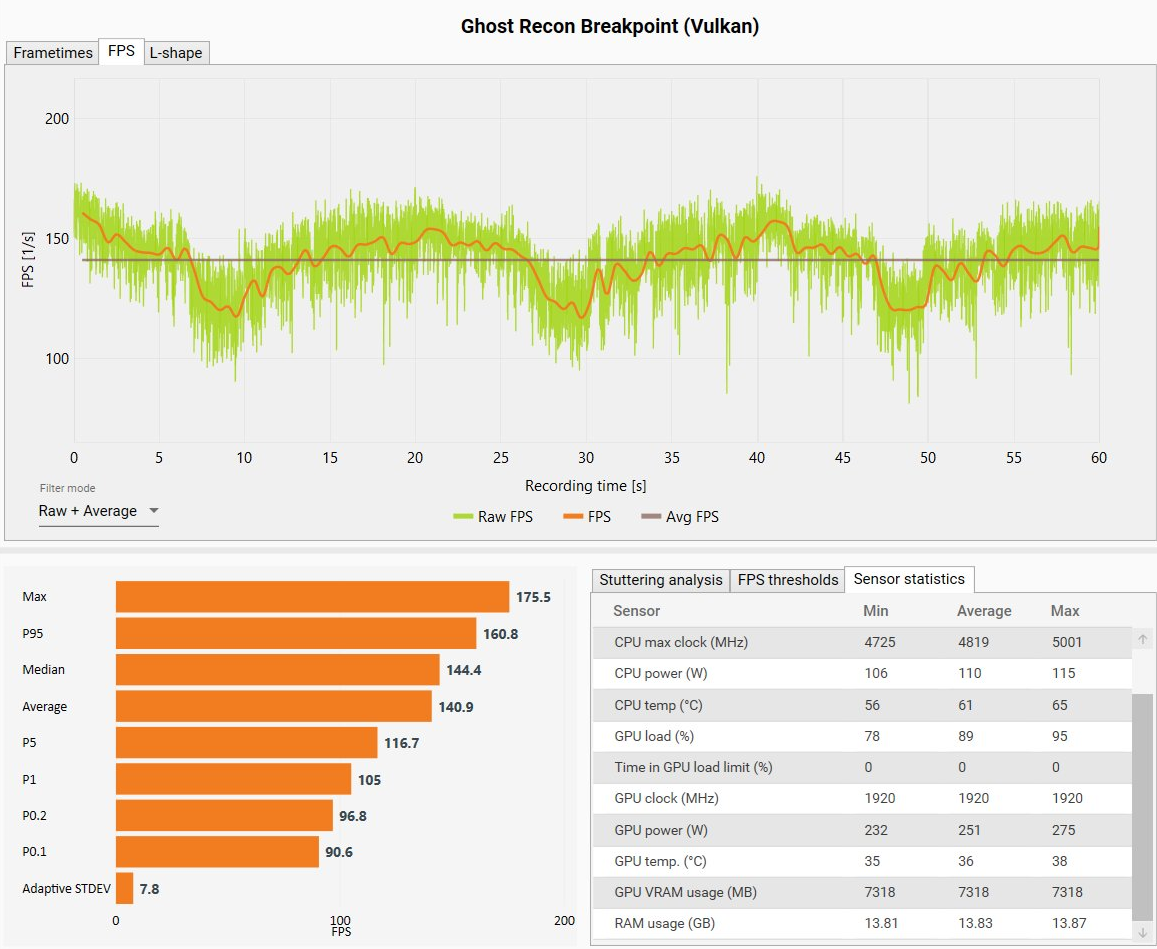

As an example, here is the built-in benchmark of Ghost Recon Breakpoint compared to a demanding custom scene. Both were run at 1080p with high settings.

Frame rate captured with CapFrameX, 20 seconds per run.

Results of the built-in benchmark with identical settings.

The built-in benchmark delivers 40% more average FPS than the custom scene. This gap is substantial and, at the lower end of the frame rate range, can decide whether a game runs smoothly or not. I'll shed more light on this in a follow-up article.
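A percentage like this depends on which result serves as the baseline. The following Python sketch shows the arithmetic with hypothetical round numbers (70 FPS for the built-in benchmark, 50 FPS for the custom scene), not the measured Ghost Recon Breakpoint values; the function name is illustrative.

```python
def percent_difference(value, baseline):
    """Relative difference of `value` versus `baseline`, in percent of the baseline."""
    return (value / baseline - 1.0) * 100.0


builtin_fps = 70.0  # hypothetical built-in benchmark result
custom_fps = 50.0   # hypothetical custom-scene result

print(f"{percent_difference(builtin_fps, custom_fps):+.1f} %")  # +40.0 %
print(f"{percent_difference(custom_fps, builtin_fps):+.1f} %")  # -28.6 %
```

So "40% more" relative to the custom scene means the custom scene runs roughly 29% slower than the built-in benchmark suggests. If the same ratio held on a slower system (an assumption, since the gap can vary with hardware), a built-in result of 42 FPS would correspond to only 30 FPS in the demanding scene.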

How good is the worst-case method?

There are certainly critics who accuse the method of lacking practicality. Whether that criticism applies depends on the scene and settings chosen in each individual case, and it is important to maintain a certain degree of realism. In the end, however, it is an essential approach for estimating performance. Games cannot be measured in their entirety, so the worst-case method offers a feasible way to make statements about recommended hardware. Built-in benchmarks are often too optimistic about the performance to expect, and selecting a suitable custom scene requires experience and time.