A CPU test for a specific game: why do that? What about the graphics card? What is so special about Fortnite? So many questions, but also clear answers. The CPU is the most important component when playing Fortnite, or more precisely, when you want to play it competitively with the highest possible FPS and the lowest possible input lag. Paired with a powerful graphics card, Fortnite always runs into a CPU limit. The game is still very popular, so there is considerable interest in finding out which hardware ultimately performs best.

Fortnite... a nightmare for testers

Testing Fortnite is anything but fun, and it isn't easy either. In fact, we had originally planned to include the game in the 2021 CPU benchmark suite. There was a lot of resistance within the team, but in the end we gave the title a chance. During the testing phase, it turned out that the skepticism was justified. We even had to hack the replay (a binary file) to obtain a reproducible basis. Several updates in quick succession invalidated the replay. Furthermore, the updates affected performance, so entire test series had to be repeated. Finally, today's update (Season 6 Chapter 2) invalidated the replay in a way that could no longer be worked around or hacked, so certain tests could not be run. We decided to publish the test anyway, as the data we collected is sufficient.

Support from the community

Without the help of the community, we would not have had a chance to produce a meaningful test. Special thanks therefore go to Twitter user Normeeen, who used his contacts in the scene to get us the replays. He also advised us on which settings are typically used in competitive play.

The replay used for the test was provided by Twitter user Serhat. Many thanks to him as well.

How we proceeded

What is an appropriate way to figure out which CPU is the best? Run some benchmarks, compare the numbers, and you're done... straightforward, so to speak.

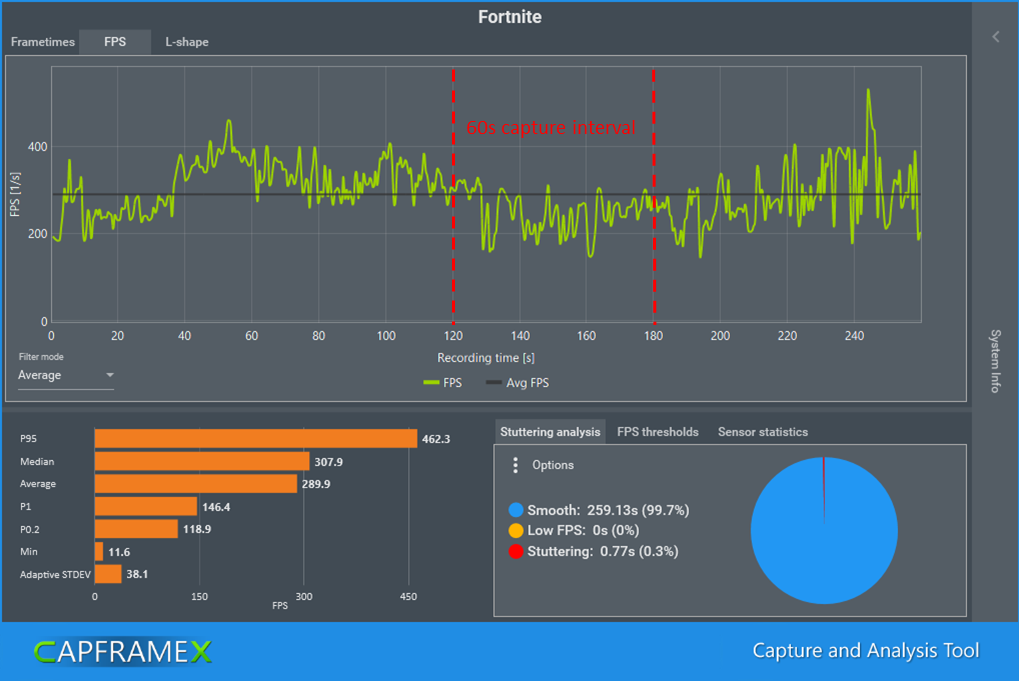

That would be nice, but in the end it's not that simple. We received a total of three replays from Serhat. We captured each of them in full with CapFrameX to find out where the strongest drops (not spikes, but longer drops) in the FPS curve occur. Unsurprisingly, this is the case in the endgame, when many players are fighting in a confined space. The fact that this phase is particularly performance-critical supported the decision to use this specific scene. However, the linked video still shows the old settings using DirectX 11. During testing, we changed the API because the old performance mode caused massive problems for many players after one of the updates. This is another example of how dynamic the testing conditions for this title are.

The graphic shows the last 4 minutes of the replay that was ultimately used, i.e. the performance-critical endgame phase. The selected 60-second interval has the lowest average FPS and shows strong drops. In other words, we applied the worst-case method here.
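The worst-case selection described above can be sketched as a sliding-window search over a frame-time capture. This is not CapFrameX's implementation; it is a minimal sketch that assumes the capture is available as a list of per-frame times in milliseconds (such as PresentMon's MsBetweenPresents column) and uses a hypothetical helper name, `worst_window`. It returns the frame range covering at least 60 seconds with the lowest average FPS, where average FPS is the number of frames divided by the elapsed time.

```python
from typing import Sequence, Tuple


def worst_window(frametimes_ms: Sequence[float], window_s: float = 60.0) -> Tuple[int, int, float]:
    """Hypothetical helper: find the window of at least `window_s` seconds
    with the lowest average FPS. Returns (start, end, avg_fps), end exclusive."""
    window_ms = window_s * 1000.0
    best = None
    j = 0        # exclusive right edge of the current window
    acc = 0.0    # summed frame time of frames [i, j)
    for i in range(len(frametimes_ms)):
        # Extend the right edge until the window covers the requested duration.
        while j < len(frametimes_ms) and acc < window_ms:
            acc += frametimes_ms[j]
            j += 1
        if acc < window_ms:
            break  # the remaining frames are shorter than one full window
        fps = (j - i) * 1000.0 / acc  # frames divided by elapsed seconds
        if best is None or fps < best[2]:
            best = (i, j, fps)
        acc -= frametimes_ms[i]  # drop the left frame before moving on
    if best is None:
        raise ValueError("capture is shorter than the requested window")
    return best


# Example: 30 s at 100 FPS, 60 s at 50 FPS, 30 s at 100 FPS
capture = [10.0] * 3000 + [20.0] * 3000 + [10.0] * 3000
start, end, fps = worst_window(capture)
start_s = sum(capture[:start]) / 1000.0
print(f"worst 60 s window starts at {start_s:.1f} s, avg {fps:.1f} FPS")
# prints: worst 60 s window starts at 30.0 s, avg 50.0 FPS
```

Dividing frames by elapsed time, rather than averaging per-frame FPS values, keeps long frames properly weighted, which matters when the goal is to find sustained drops rather than isolated spikes.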

Settings... basically everything low

A word in advance about the resolution, so that this decision is understood. We chose 720p because the scene was originally planned as part of our CPU benchmark suite, so the reason was consistency. The good thing about Fortnite's strong CPU limitation is that at 1080p there is practically no difference to 720p, provided you use a strong GPU. That was the case with the RTX 3090, so the results are applicable in practice. Except for the view distance, all settings were set to Low. A maximum view distance offers tactical advantages, which is why competitive players use it.

DirectX 11 was used at the beginning of the test phase, but updates turned the tide. DirectX 12 ran more stably for many players, so we chose the low-level API. This choice even changed which CPU came out on top in the benchmarks.

Test systems

Originally, tests with 8-core CPUs from AMD and Intel were planned as well. These plans fell victim to Epic Games' update policy. However, preliminary tests indicate that 8-core CPUs behave very similarly to the tested models. Only the 6-core CPUs show significant drops in performance and are not recommended in combination with the DX12 API if maximum performance is the goal.

In principle, we could also have included the Rocket Lake i7-11700K as an interesting test candidate. However, the current BIOS versions still cause major problems with RAM OC, so the comparison would have been quite unfair. There will probably be a follow-up test, then of course with Rocket Lake, but necessarily with a new test scene.

Intel

Stock

CPU: i9-10900K, PL1=default, PL2=default

RAM: 2933MT/s RAM clock (CL16-16-36-2T)

Mainboard: Asus Z490 Maximus XII Hero

Graphics card: RTX 3090

OC

CPU: i9-10900K, 5.2GHz core clock, 4.9GHz cache clock

RAM: 4300MT/s RAM clock (CL16-17-17-37-2T)

Mainboard: Asus Z490 Maximus XII Hero

Graphics card: RTX 3090

Stock

CPU: i5-10600K, PL1=default, PL2=default

RAM: 2933MT/s RAM clock (CL16-16-36-2T)

Mainboard: Asus Z490 Maximus XII Hero

Graphics card: RTX 3090

OC

CPU: i5-10600K, 5.0GHz core clock, 4.7GHz cache clock

RAM: 4133MT/s RAM clock (CL16-17-17-37-2T)

Mainboard: Asus Z490 Maximus XII Hero

Graphics card: RTX 3090

AMD

Stock

CPU: R9 5900X

RAM: 3200MT/s RAM clock (CL16-16-36-2T)

Mainboard: Gigabyte X570 Aorus Master

Graphics card: RTX 3090

OC

CPU: R9 5900X

RAM: 3800MT/s RAM clock (CL16-16-16-34-1T)

Mainboard: Gigabyte X570 Aorus Master

Graphics card: RTX 3090

Numbers, bars, lines

The criteria used to decide the competition are as follows:

- High average FPS

- Smoothness of frame times, fewer spikes

- Low input lag

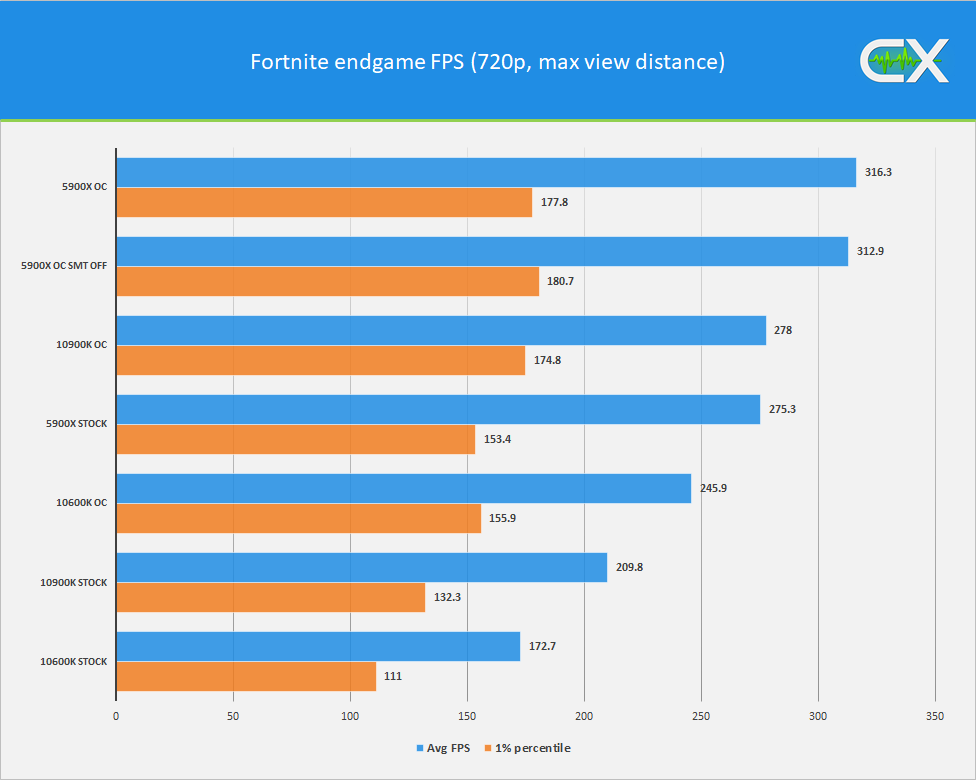

Average FPS and 1% percentile
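As a reference for reading this chart, here is a minimal sketch of how both metrics can be derived from frame times. It assumes frame times in milliseconds and defines the 1% percentile FPS via the nearest-rank 99th percentile of frame times; CapFrameX's exact percentile interpolation may differ, and the function names are hypothetical.

```python
import math
from typing import Sequence


def average_fps(frametimes_ms: Sequence[float]) -> float:
    """Average FPS = number of frames / total elapsed time in seconds."""
    return len(frametimes_ms) * 1000.0 / sum(frametimes_ms)


def p1_fps(frametimes_ms: Sequence[float]) -> float:
    """1% percentile FPS: the FPS at the 99th-percentile frame time
    (nearest-rank method), so at least 99% of frames are this fast or faster."""
    ordered = sorted(frametimes_ms)
    rank = math.ceil(len(ordered) * 99 / 100)  # 1-based nearest rank
    return 1000.0 / ordered[rank - 1]


# Example: 98 frames at 10 ms plus 2 stutter frames at 50 ms
capture = [10.0] * 98 + [50.0] * 2
print(f"avg: {average_fps(capture):.1f} FPS, P1: {p1_fps(capture):.1f} FPS")
# prints: avg: 92.6 FPS, P1: 20.0 FPS
```

The example shows why both numbers are reported: two slow frames barely move the average, but they dominate the 1% percentile.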

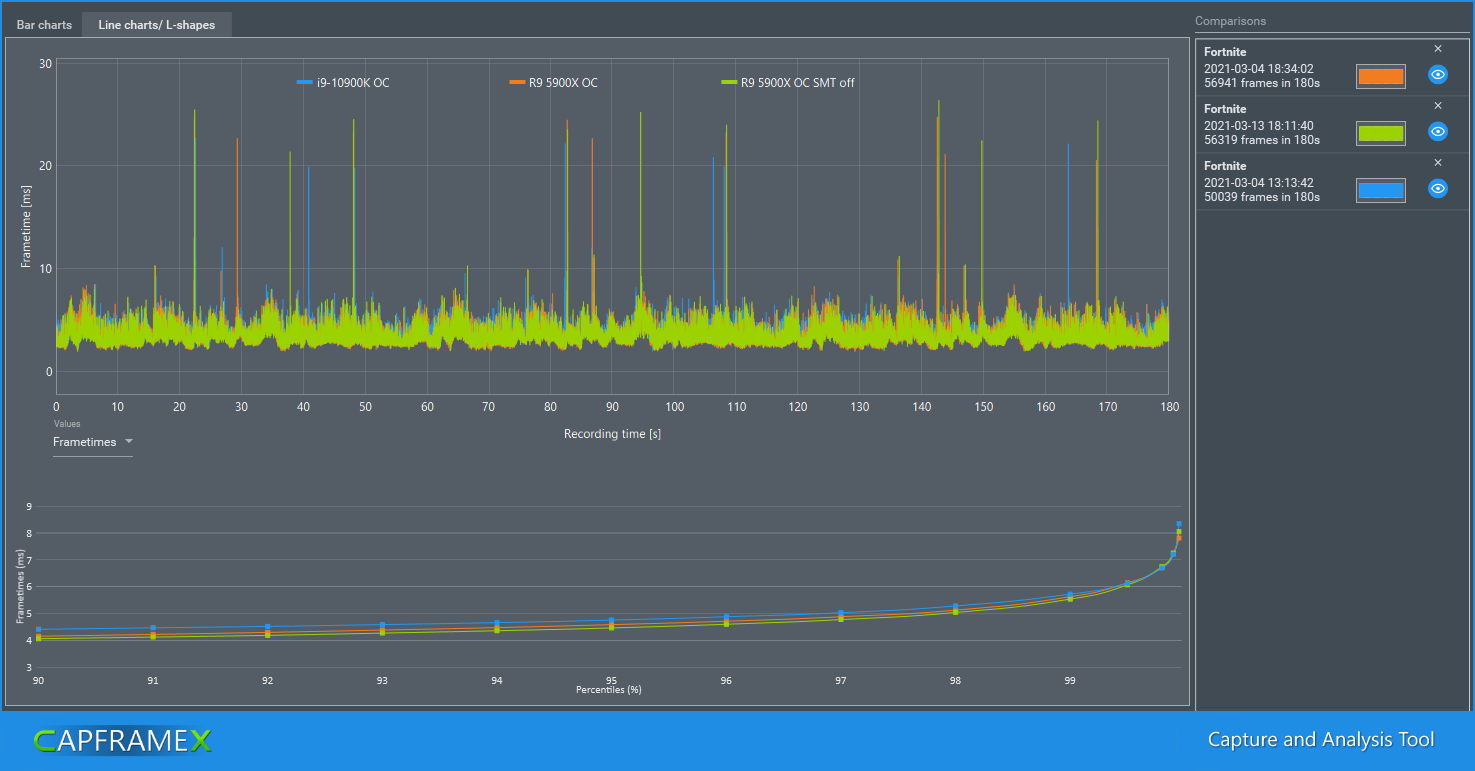

Frame times

For a better overview, only the top CPUs with OC are shown in the chart. The 5900X with SMT (simultaneous multithreading, i.e. logical threads) disabled is also interesting and has been added.

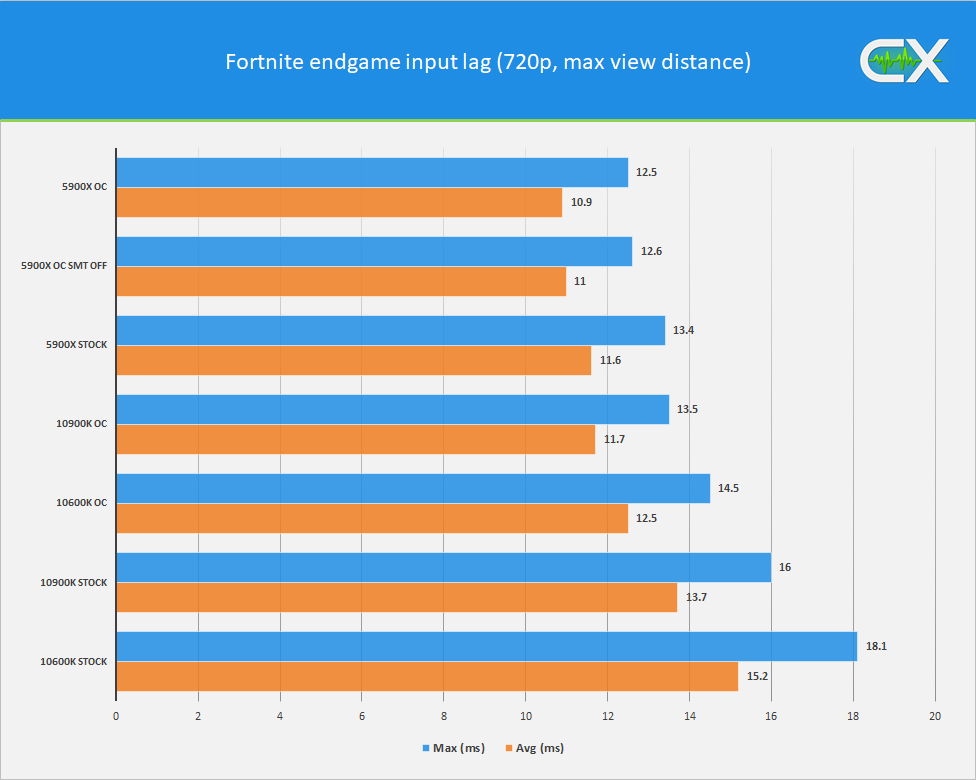

Input lag

The input lag calculation is a feature of CapFrameX. The values are derived from the present states recorded by PresentMon and thus provide a good approximation of the actual input lag.

Conclusion

The frame times hardly show any differences. The situation is completely different for FPS and input lag. The results speak for themselves: Ryzen 5000 CPUs are the best CPUs for Fortnite. The ratios change only marginally when using 8 cores instead. SMT basically does not matter, but it should be enabled on an 8-core CPU. Six cores, on the other hand, are not optimal. Whether the performance is nevertheless sufficient is up to the individual gamer. At least an RTX 3060 Ti or RTX 3070 should be used so that the CPU can unleash its full performance. Unfortunately, due to time constraints, we did not run any tests with AMD's Big Navi graphics cards. Their performance was not really competitive under DirectX 11, but that has very likely changed with DirectX 12.

A retest with better BIOS versions will show whether Rocket Lake can reclaim the Fortnite CPU crown. Until then, Zen 3 demonstrates its superiority and is the recommendation for gamers who want maximum performance.